
How to Pull Data from an API using Python Requests

Technical API how-to without the headache

One thing I'm asked to do over and over again is automate pulling data from an API. Despite holding the title "Data Scientist," I'm on a small team, so I'm not just responsible for building models, but also for pulling data, cleaning it, and pushing and pulling it wherever it needs to go. Many of you are probably in the same boat.

When I first began my journey of learning how to make HTTP requests, pull back a JSON string, parse it, and then push it into a database, I had a very hard time finding clear, concise articles explaining how to actually do this very important task. If you've ever gone down a Google black hole to resolve a technical problem, you've probably discovered that very technical people like to use technical language to explain how to perform the given task. The problem with that is, if you're self-taught, as I am, you not only have to learn how to do the task you've been asked to do but also learn a new technical language. This can be incredibly frustrating.

If you want to avoid learning technical jargon and just get straight to the point, you've come to the right place. In this article, I'm going to show you how to pull data from an API and then automate the task to re-pull every 24 hours. For this example, I will use the Microsoft Graph API and demonstrate how to pull text from emails. I will refrain from using pre-built API packages and rely on HTTP requests using the Python Requests package. This way you can apply what you learn here to nearly any other RESTful API that you'd need to work on.

If you're having trouble with API requests in this tutorial, here's a tip: use Postman. Postman is a fantastic app that allows you to set up and make API calls through a clean interface. The beauty of it is that once you get the API call working, you can export the code in Python and then paste it right into your script. It's amazing.

*Note: This tutorial is meant to be a simple and easy to understand method to access an API, that's it. It will likely not be robust to your exact situation or data needs, but should hopefully set you down the right path. I've found the below method to be the simplest to understand to quickly get to pulling data. If you have suggestions to make things even easier, sound off in the comments.

API Documentation

There's no way around navigating API documentation. For working with Outlook, you go here. If you are successful in following this tutorial and want to do more with the API, use that link for a reference.

Authorization

Most APIs, including the Microsoft Graph, require authorization before they'll give you an access token that will allow you to call the API. This is often the most difficult part for someone new to pulling data from APIs (it was for me).

Register your App

Follow the instructions here to register your app (these are pretty straightforward and use the Azure GUI). You'll need a school, work, or personal Microsoft account to register. If you use a work email, you'll need admin access to your Azure instance. If you're doing this for work, contact your IT department and they'll likely be able to register the app for you. Select 'web' as your app type and use a trusted URL for your redirect URI (one where only you have access to the underlying web data). I used franklinsports.com, as only my team can access the web data for that site. If you were building an app, you would redirect to a place where your app could pick up the code and authorize the user.

Enable Microsoft Graph Permissions

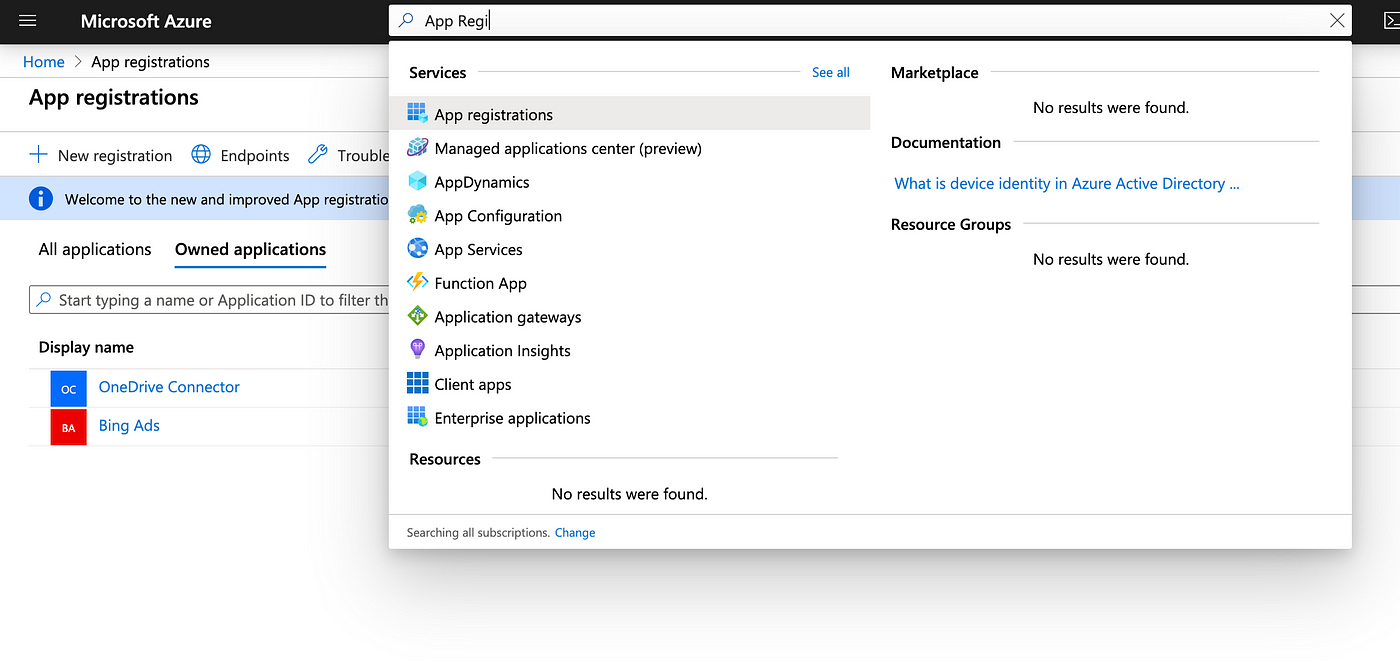

Once your app is registered, go to the App Registration portal:

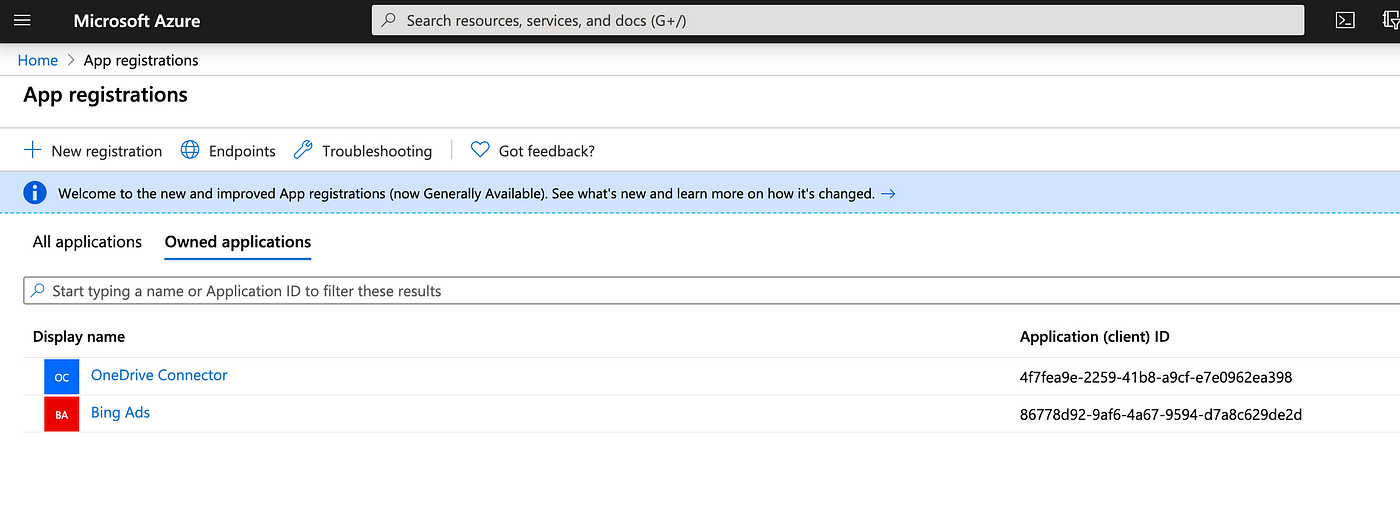

Click where it displays the name of your app:

On the following page, click on 'View API Permissions'.

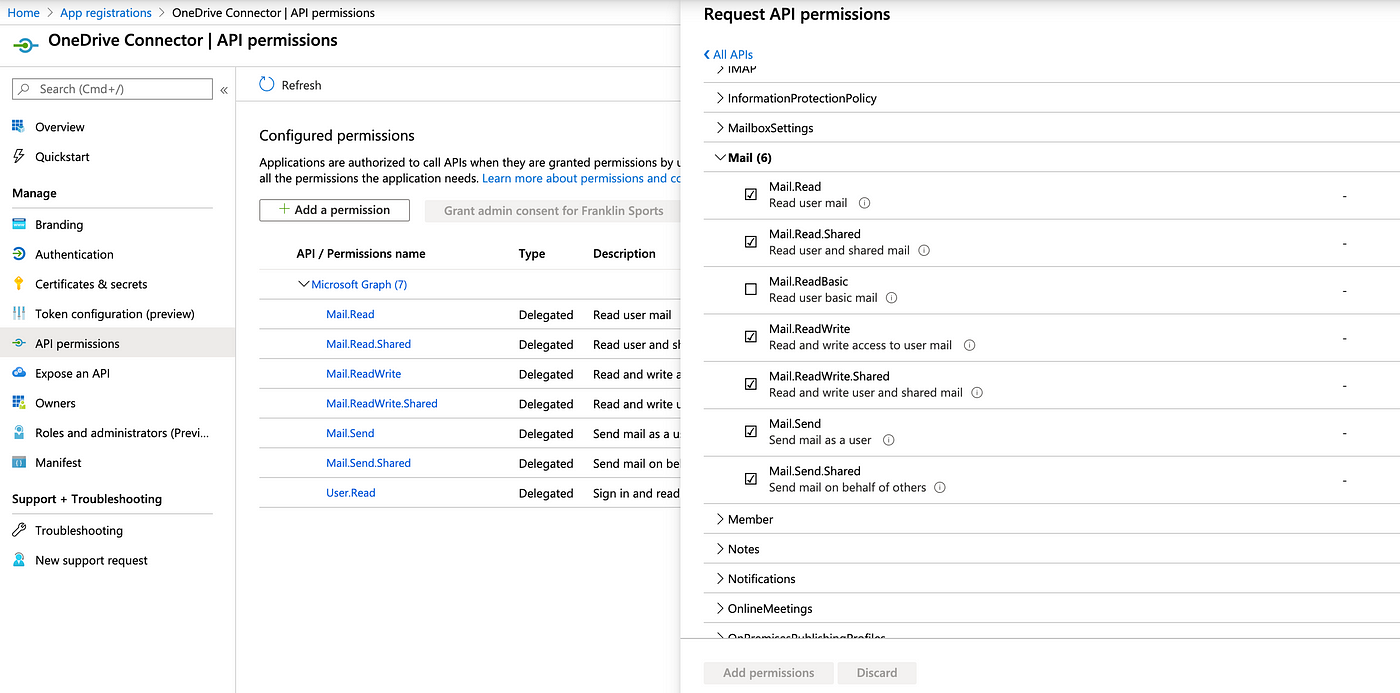

Finally, select the Microsoft Graph and then check all the boxes under Messages for 'Delegated Permissions.' (Note: for this example I'll only be pulling emails from a single signed-in user. To pull email data for an organization you'll need application permissions; follow the flow here.)

OK, you're ready to start making API calls.

Authorization Step 1: Get an access code

You'll need an access code to get the access token that will allow you to make calls to the MS Graph API. To do this, enter the following URL into your browser, swapping the appropriate credentials with the information shown on your app page.

Goes in the URL:

Take the URL that outputs from the code snippet and paste it into your browser. Hit enter, and you'll be asked to authorize your Microsoft account for delegated access. Use the Microsoft account that you used to register your app.
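As a minimal sketch of how such a URL can be put together, the snippet below builds it with the standard library. It assumes the Microsoft identity platform v2.0 authorize endpoint and the Mail.Read and offline_access scopes (offline_access is what asks for a refresh token later). The function name and the client ID are placeholders of my own, so swap in the values from your app page.

```python
from urllib.parse import urlencode

# Microsoft identity platform v2.0 authorization endpoint
AUTHORIZE_URL = "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"


def build_authorize_url(client_id, redirect_uri, scopes):
    """Return the URL to paste into the browser to request an access code."""
    params = {
        "client_id": client_id,
        "response_type": "code",       # ask for an authorization code
        "redirect_uri": redirect_uri,  # must match the app registration
        "response_mode": "query",      # code comes back in the query string
        "scope": " ".join(scopes),
    }
    # urlencode percent-encodes each value, including the redirect URI
    return AUTHORIZE_URL + "?" + urlencode(params)


# Placeholder values (hypothetical): use the ones from your own app page
print(build_authorize_url(
    client_id="00000000-0000-0000-0000-000000000000",
    redirect_uri="https://franklinsports.com",
    scopes=["offline_access", "Mail.Read"],
))
```

The scopes you request here should match the delegated permissions you enabled for the app.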

If the authorization doesn't work, there's something wrong with the redirect URL you've chosen or with the app registration. I've had trouble with this part before, so don't fret if it doesn't work on your first try. If the authorization is successful, you'll be redirected to the URL that you used in the app registration. Appended to the URL will be parameters that contain the code you'll use in our next step. It should look like this:

Take the full code and save it in your Python script.
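If you'd rather not copy the code out of the address bar by hand, here is a small sketch that pulls it out of the redirected URL. The function name and the example URL are made up for illustration; a real code is much longer.

```python
from urllib.parse import urlparse, parse_qs


def extract_code(redirected_url):
    """Pull the 'code' query parameter out of the URL you were redirected to."""
    query = parse_qs(urlparse(redirected_url).query)
    if "error" in query:
        # Failures come back as ?error=...&error_description=...
        raise ValueError(query.get("error_description", query["error"])[0])
    return query["code"][0]


# Made-up example of a redirected URL
example = "https://franklinsports.com/?code=M.R3_BAY.abc123&state=12345"
code = extract_code(example)
print(code)
```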

Authorization Step 2: Use your access code to get a refresh token

Before proceeding, make sure you have the latest version of the Python Requests package installed. The simplest way to do that is by using pip:

pip install --upgrade requests

In this step, you will take the code generated in step 1 and send a POST request to the MS Graph OAuth authorization endpoint in order to acquire a refresh token. (*Note: For security purposes, I recommend storing your client_secret in a different script than the one you use to call the API. You can store the secret in a variable and then import it, or use pickle, which you'll see below.)

Swap the ####### in the code snippet with your own app information and the code you received in step 1, and then run it. (*Note: The redirect URI must be URL encoded, which means it'll look like this: "https%3A%2F%2Ffranklinsports.com")
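Here is a rough sketch of what that request can look like, assuming the v2.0 token endpoint and the authorization_code grant type. The two function names are my own. The HTTP call is passed in as a parameter so the logic can be tried without touching the network. With Requests installed you would pass requests.post.

```python
from urllib.parse import quote, urlencode

# Microsoft identity platform v2.0 token endpoint
TOKEN_URL = "https://login.microsoftonline.com/common/oauth2/v2.0/token"


def build_token_payload(client_id, client_secret, redirect_uri, code):
    """Form fields for exchanging the step 1 code for tokens."""
    return {
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,  # plain, un-encoded URI
        "code": code,
        "grant_type": "authorization_code",
    }


def exchange_code_for_tokens(payload, http_post):
    """POST the form and return the parsed JSON response.

    http_post is any callable that behaves like requests.post.
    """
    response = http_post(TOKEN_URL, data=payload)
    response.raise_for_status()
    return response.json()


# What URL encoding does to the redirect URI on its own
print(quote("https://franklinsports.com", safe=""))
# The same encoding applied to the whole form body
print(urlencode(build_token_payload("id", "secret", "https://franklinsports.com", "abc")))
```

When you pass a dict as data, Requests form-encodes it for you, so calling exchange_code_for_tokens(payload, requests.post) needs no hand-encoding of the redirect URI. Encoding by hand only matters if you build the body as one long string.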

You should receive a JSON response that looks something like this:

{'access_token':'#######################','refresh_token':'###################'}

Authorization Step 3: Use your refresh token to get an access token

At this point you already have an access token and could begin calling the API. However, that access token will expire after a set amount of time. Therefore we want to set up our script to acquire a fresh access token each time we run it so that our automation will not break. I like to store the new refresh_token from step 2 in a pickle object, and then load it in to get the fresh access_token when the script is run:

import pickle
import requests

filename = '####path to the name of your pickle file#######'
print(filename)

# Load the refresh token saved on the previous run
with open(filename, 'rb') as filehandler:
    refresh_token = pickle.load(filehandler)

url = "https://login.microsoftonline.com/common/oauth2/v2.0/token"
# Form-encoded body; no spaces or line breaks between the parameters
payload = ("client_id=##########&redirect_uri=##################"
           "&client_secret=##################&code={}&refresh_token={}"
           "&grant_type=refresh_token").format(code, refresh_token)
headers = {
    'Content-Type': "application/x-www-form-urlencoded",
    'cache-control': "no-cache"
}
response = requests.request("POST", url, data=payload, headers=headers)
r = response.json()
access_token = r.get('access_token')
refresh_token = r.get('refresh_token')

# Save the new refresh token for the next run
with open(filename, 'wb') as f:
    pickle.dump(refresh_token, f)

Calling the API and Cleaning and parsing the JSON

With your access_token in tow, you're ready to call the API and start pulling data. The below code snippet will demonstrate how to retrieve the last 5 email messages with specific subject parameters. Place the access_token in the headers and pass it in the GET request to the email messages endpoint.

import json
import requests

# Search the signed-in user's messages by subject
url = 'https://graph.microsoft.com/v1.0/me/messages?$search="subject:###SUBJECT YOU WANT TO SEARCH FOR###"'
headers = {
    'Authorization': 'Bearer ' + access_token,
    'Content-Type': 'application/json; charset=utf-8'
}
r = requests.get(url, headers=headers)
files = r.json()
print(files)

The code above will return JSON output that contains your data.
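To give a feel for parsing that output, here is a sketch that runs on a trimmed-down, made-up response. The real one has many more fields per message, but the messages sit in a list under 'value', and each one carries a 'subject' and a 'bodyPreview'. The function name extract_rows is my own.

```python
# A made-up response in the shape the messages endpoint returns
sample = {
    "@odata.nextLink": "https://graph.microsoft.com/v1.0/me/messages?$skip=10",
    "value": [
        {"subject": "Weekly report", "bodyPreview": "Sales were up"},
        {"subject": "Invoice 42", "bodyPreview": "Please find attached"},
    ],
}


def extract_rows(page):
    """Return one [subject, bodyPreview] row per message on a page."""
    return [
        [message.get("subject", ""), message.get("bodyPreview", "")]
        for message in page.get("value", [])
    ]


rows = extract_rows(sample)
for subject, preview in rows:
    print(subject, "|", preview)
```

Using .get with a default keeps the script from crashing on a message that is missing one of the fields.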

If you want more than 5 emails, you'll need to paginate through the results. Most APIs have their own methods for how to best paginate results (in English, most APIs will not give you all the data you want with just one API call, so they give you ways to make multiple calls that allow you to move through the results in chunks to get all or as much as you want). The MS Graph is nice in that it provides the exact endpoint you need to go to in order to get the next page of results, making pagination easy. In the below code snippet, I demonstrate how to use a while loop to make successive calls to the API and add the subject and text of each email message to a list of lists.

emails = []
while True:
    # Collect the subject and body preview of every message on this page
    for item in files['value']:
        emails.append([item['subject'], item['bodyPreview']])
    # The last page has no '@odata.nextLink', so stop there
    url = files.get('@odata.nextLink')
    if not url:
        break
    r = requests.get(url, headers=headers)
    files = r.json()

Putting the Data Somewhere

Finally, I'll show an example of how to put the JSON data into a CSV text file, which could then be analyzed with Excel or pushed into a database. This code snippet will demonstrate how to write each row of our list of lists into a CSV file.

import csv

write_file = '###LOCATION WHERE YOU WANT TO PUT THE CSV FILE###'
with open(write_file, 'w', newline='') as f:
    writer = csv.writer(f, lineterminator='\n')
    writer.writerows(emails)
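If you'd rather skip the CSV and push the rows straight into a database, the same list of lists works there too. This is only a sketch using SQLite from the standard library. The table and column names are assumptions of mine, and the sample rows stand in for the emails list built earlier.

```python
import sqlite3

# Stand-in for the list of [subject, bodyPreview] rows built earlier
emails = [
    ["Weekly report", "Sales were up"],
    ["Invoice 42", "Please find attached"],
]

# ":memory:" keeps the example self-contained; use a file path to persist
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE IF NOT EXISTS emails (subject TEXT, body_preview TEXT)")
# executemany runs one parameterized INSERT per row
conn.executemany("INSERT INTO emails VALUES (?, ?)", emails)
conn.commit()

count = conn.execute("SELECT COUNT(*) FROM emails").fetchone()[0]
print(count)
conn.close()
```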

Automate

Now that our script is written, we want to automate it so we can get new messages within our parameters as they come in daily.

I've done this in two ways; however, there are many ways to automate:

1. Using Crontab on an Ubuntu Server

This is quite simple. If you're on Ubuntu, type 'crontab -e' into your console and it will open up your cron jobs in a text file. Go to a new line and type in:

0 1 * * * /full path to python environment/python /full path to file/example.py

Assuming cron is set up correctly, the above would set the script to run automatically at 01:00 UTC every day (or in whatever timezone your server is on). You can change that time to whatever you need it to be by changing the 1 in the code. If you want to get complex with your cron timing, this site is a nice resource.

2. Using Windows Task Scheduler

This is a nice and easy way to automate in Windows that uses the Task Scheduler app. Simply open up Task Scheduler and click 'Create Task' on the right-hand side. This app is quite straightforward and allows you to set up the schedule and what program you want to run using the GUI.

When you're in the 'Actions' pane, select Python as the program and then in the 'Add arguments' field (the one with '(optional)' next to it), put the path to your .py file.

That's it!

In this walkthrough, I've shown you how to pull your email data from the Microsoft Graph API. The principles demonstrated here can be used to work with nearly any RESTful API or to do much more complex tasks with the Microsoft Graph (I've used the MS Graph API to push files in and out of our corporate SharePoint, push data from SharePoint folders into our database, and send automated emails and reports).

Questions or comments? You can email me at cwarren@stitcher.tech. Or, follow me on LinkedIn at https://www.linkedin.com/in/cameronwarren/

I also provide data services, which you can learn more about at http://stitcher.tech/.

Source: https://towardsdatascience.com/how-to-pull-data-from-an-api-using-python-requests-edcc8d6441b1
